Towards a New Generation of Augmented Reality Assistance Systems for Procedural Tasks

In a recently published dissertation, we examine the design and functionality of AR and context-aware AR support systems and their impact on task performance. The work also investigates the integration of eye tracking technology and aims to build a comprehensive system model that describes the interplay between human behavior, AR, and context-aware AR.

Procedural tasks are common in many professions and require precise execution, especially when working with complex machines, equipment, or patients. Augmented reality (AR) head-mounted displays (HMDs) have shown promise in assisting operators with such tasks. However, state-of-the-art AR systems provide only static information and do not consider the operator’s current situation or level of experience. Context-aware AR has been introduced to close this gap, especially for complex operations. Such systems analyze sensor data (e.g., eye tracking) in real time to estimate user behavior and compare it with a process model. From this comparison, they infer the current context and the support required, adapt the displayed information, and provide real-time feedback.
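The comparison of observed behavior with a process model can be illustrated with a minimal Python sketch. It is not the implementation from the thesis: the `Step` class, the `infer_support` function, the placeholder step and target names, and the rule of using the most frequently fixated object in a short gaze window are all assumptions made for illustration.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class Step:
    name: str    # hypothetical step label
    target: str  # object the operator is expected to look at during this step


# Hypothetical process model: an ordered list of steps with placeholder names.
PROCESS = [
    Step("step_a", "object_a"),
    Step("step_b", "object_b"),
    Step("step_c", "object_c"),
]


def infer_support(process, completed, gaze_window):
    """Compare recent gaze targets with the next expected step.

    completed: number of steps already finished.
    gaze_window: labels of recently fixated objects (assumed to come
    from an eye tracker with object labeling, not modeled here).
    Returns "none", a hint, or a warning as a plain string.
    """
    if completed >= len(process):
        return "none"  # procedure finished, nothing to support
    expected = process[completed]
    if not gaze_window:
        return f"hint: {expected.name}"  # no gaze data: show the default hint
    dominant, _ = Counter(gaze_window).most_common(1)[0]
    if dominant == expected.target:
        return "none"  # behavior matches the model
    later_targets = {s.target for s in process[completed + 1:]}
    if dominant in later_targets:
        # Attention is already on a later step: the current one may be skipped.
        return f"warning: {expected.name} not finished"
    return f"hint: {expected.name}"  # attention is elsewhere: guide the operator
```

In this sketch, the returned string stands in for the adaptation of the AR display. A real system would likely use richer behavior models and more sensor channels than a single gaze-target count.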

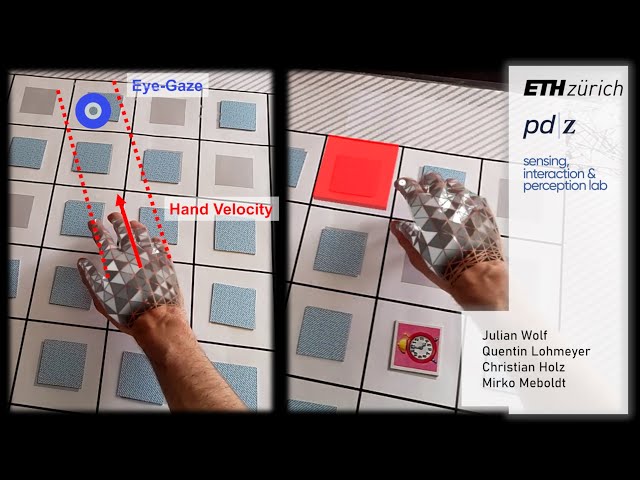

The thesis "Towards Advanced User Guidance and Context Awareness in Augmented Reality-guided Procedures" includes three studies that investigated the effectiveness of AR and context-aware AR support systems in procedural tasks. Study I compared AR with traditional media as a means of providing contextual information in extracorporeal membrane oxygenation (ECMO) cannulation training. It demonstrated clear benefits of AR and identified areas that context-aware AR could improve. Study II examined visualization strategies for real-time feedback to operators in simulated spine surgery. It showed that real-time feedback levels the performance of novice and experienced operators, and it identified the AR visualizations that operators preferred. Study III used a memory card game as an example to demonstrate the potential of eye and hand tracking features to predict and prevent operator errors before they occur.

The work draws two main conclusions. First, context-aware AR support significantly improves procedural outcomes and can raise the performance of less experienced operators to the level of experts. Second, real-time analysis of hand-eye coordination patterns enables predictive AR support and error prevention, which could provide an additional safety net for operators in the future. These findings pave the way for improved support systems for procedural tasks, with higher-quality task execution, increased safety, and better outcomes.

The doctoral thesis "Towards Advanced User Guidance and Context Awareness in Augmented Reality-guided Procedures" is available for download at this link.

For more information, please contact Julian Wolf.